import tensorflow as tf

from tensorflow import keras

import matplotlib.pyplot as plt

import numpy as np

from tensorflow.keras.layers import Dense

from tensorflow.keras.models import Sequential

Basic Deep Learning

Load dataset

The dataset is the MNIST digits dataset, obtained from the Keras package.

# Load the MNIST Dataset

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

Training and test set sizes

# Print training set size

n_samples_train = x_train.shape[0]

print(f"Training set size: {n_samples_train} images")

# Print test set size

n_samples_test = x_test.shape[0]

print(f"Test set size: {n_samples_test} images")

Training set size: 60000 images

Test set size: 10000 images

Data Preprocessing

# Reshape the data to flatten the images

x_train_flat = x_train.reshape((n_samples_train, -1))

x_test_flat = x_test.reshape((n_samples_test, -1))

# Normalise pixel values to be between 0 and 1

x_train_flat = x_train_flat / 255.0

x_test_flat = x_test_flat / 255.0

# One-hot encode the labels

y_train = keras.utils.to_categorical(y_train, num_classes=10)

y_test = keras.utils.to_categorical(y_test, num_classes=10)

Key findings

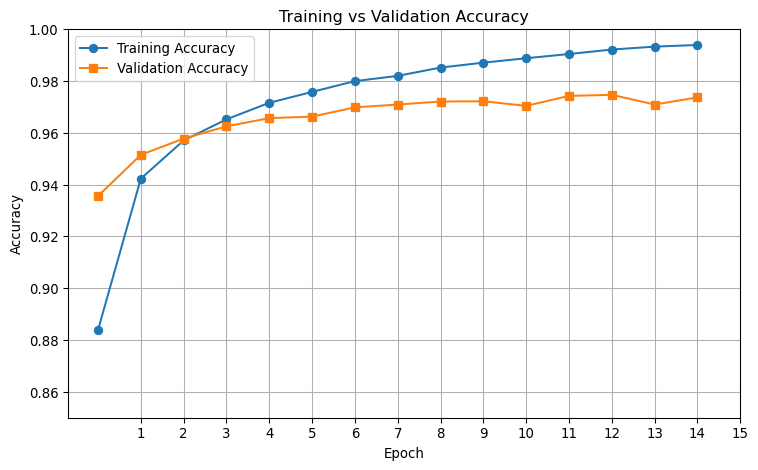

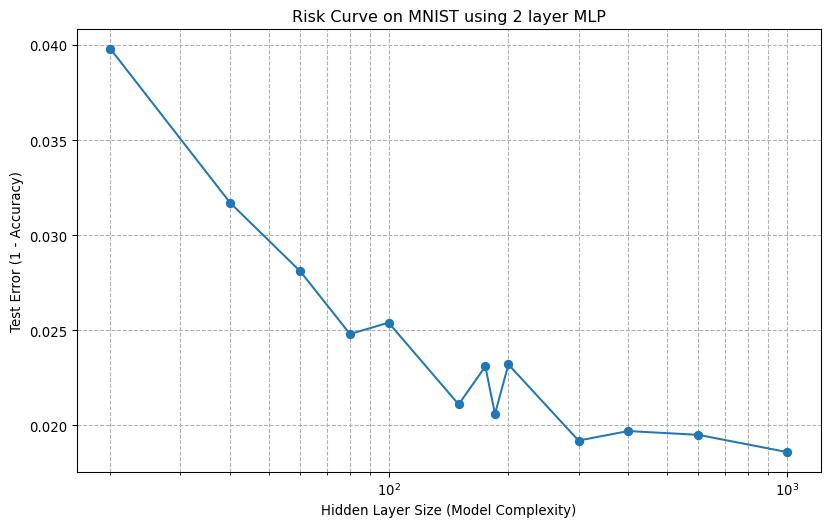

Increasing the hidden layer size (Q4) generally improved accuracy, whereas increasing the number of hidden layers (Q3) beyond 2 did not. In other words, performance tends to improve with layer width but can degrade when the network has too many layers.
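Width and depth change the number of trainable parameters in different ways, which may help when reading the comparison above. The sketch below counts weights and biases for a fully connected network, assuming 784 inputs (flattened 28×28 MNIST images) and 10 outputs. `mlp_param_count` is a hypothetical helper written for illustration, not part of Keras.

```python
def mlp_param_count(n_inputs, hidden_sizes, n_outputs):
    """Count weights and biases of a fully connected network."""
    layer_sizes = [n_inputs, *hidden_sizes, n_outputs]
    total = 0
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # Each dense layer has a (fan_in x fan_out) weight matrix plus one bias per unit
        total += fan_in * fan_out + fan_out
    return total


# Assumed shapes: 784 flattened pixels in, 10 digit classes out
for hidden in ([20, 20], [200, 200], [1000, 1000], [200, 200, 200, 200]):
    print(hidden, mlp_param_count(784, hidden, 10))
```

Under these assumptions, widening two hidden layers from 200 to 1000 units adds far more parameters than doubling the depth at width 200, so the two experiments do not scale model capacity at the same rate.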

Double Descent Curve

# A wide range of hidden layer sizes to see the full curve

hidden_sizes = [20, 40, 60, 80, 100, 150, 175, 185, 200, 300, 400, 600, 1000]

test_errors = []

for size in hidden_sizes:

    print(f"Training a two-layer network with hidden layer size: {size}")

    # Create a two-layer network

    model = Sequential([

        Dense(size, activation="relu"),

        Dense(size, activation="relu"),

        Dense(10, activation="softmax")

    ])

    model.compile(

        optimizer="adam",

        loss="categorical_crossentropy",

        metrics=["accuracy"]

    )

    model.fit(x_train_flat, y_train, epochs=20, batch_size=128, verbose=0,

              validation_split=0.2)

    # Evaluate and store the test error (1 - accuracy)

    loss, accuracy = model.evaluate(x_test_flat, y_test, verbose=0)

    test_errors.append(1 - accuracy)

Training a two-layer network with hidden layer size: 20

Training a two-layer network with hidden layer size: 40

Training a two-layer network with hidden layer size: 60

Training a two-layer network with hidden layer size: 80

Training a two-layer network with hidden layer size: 100

Training a two-layer network with hidden layer size: 150

Training a two-layer network with hidden layer size: 175

Training a two-layer network with hidden layer size: 185

Training a two-layer network with hidden layer size: 200

Training a two-layer network with hidden layer size: 300

Training a two-layer network with hidden layer size: 400

Training a two-layer network with hidden layer size: 600

Training a two-layer network with hidden layer size: 1000

# Plotting the result

plt.figure(figsize=(10, 6))

plt.plot(hidden_sizes, test_errors, marker="o", linestyle="-")

plt.xscale("log")

plt.title("Risk Curve on MNIST using 2 layer MLP")

plt.xlabel("Hidden Layer Size (Model Complexity)")

plt.ylabel("Test Error (1 - Accuracy)")

plt.grid(True, which="both", ls="--")

plt.show()
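One rough way to check whether a risk curve has the double-descent shape is to look for an interior local maximum in the test error. The sketch below does this on synthetic, made-up values. `interior_peaks`, `demo_sizes`, and `demo_errors` are hypothetical names for illustration and are not results from this notebook. Because each hidden size is trained only once here, a peak found this way may simply be run-to-run noise.

```python
def interior_peaks(sizes, errors):
    """Return (size, error) pairs where the error is a strict local maximum."""
    peaks = []
    for i in range(1, len(errors) - 1):
        # A peak is strictly higher than both of its neighbours
        if errors[i] > errors[i - 1] and errors[i] > errors[i + 1]:
            peaks.append((sizes[i], errors[i]))
    return peaks


# Synthetic values for illustration only (not measured results)
demo_sizes = [20, 40, 80, 160, 320]
demo_errors = [0.050, 0.030, 0.035, 0.025, 0.020]
print(interior_peaks(demo_sizes, demo_errors))
```

The same function could be called as `interior_peaks(hidden_sizes, test_errors)` once the training loop has finished. Averaging the test error over several random seeds before looking for peaks would likely give a more reliable picture.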